Daniel Ari Friedman leads this Math 4 Wisdom study group

- Daniel Ari Friedman

- Andrius Kulikauskas

- Epistemological portraits

- Translating ontologies

- Evolution

More Links

Andrius: A project is to translate the ontology of Active Inference into the language of Wondrous Wisdom.

Active Inference Ontology

Translating Core Terms from Active Inference into Wondrous Wisdom

The terms and definitions below come from the Active Inference Ontology, thanks to Daniel. Andrius is providing analogous terms from Wondrous Wisdom, along with explanations.

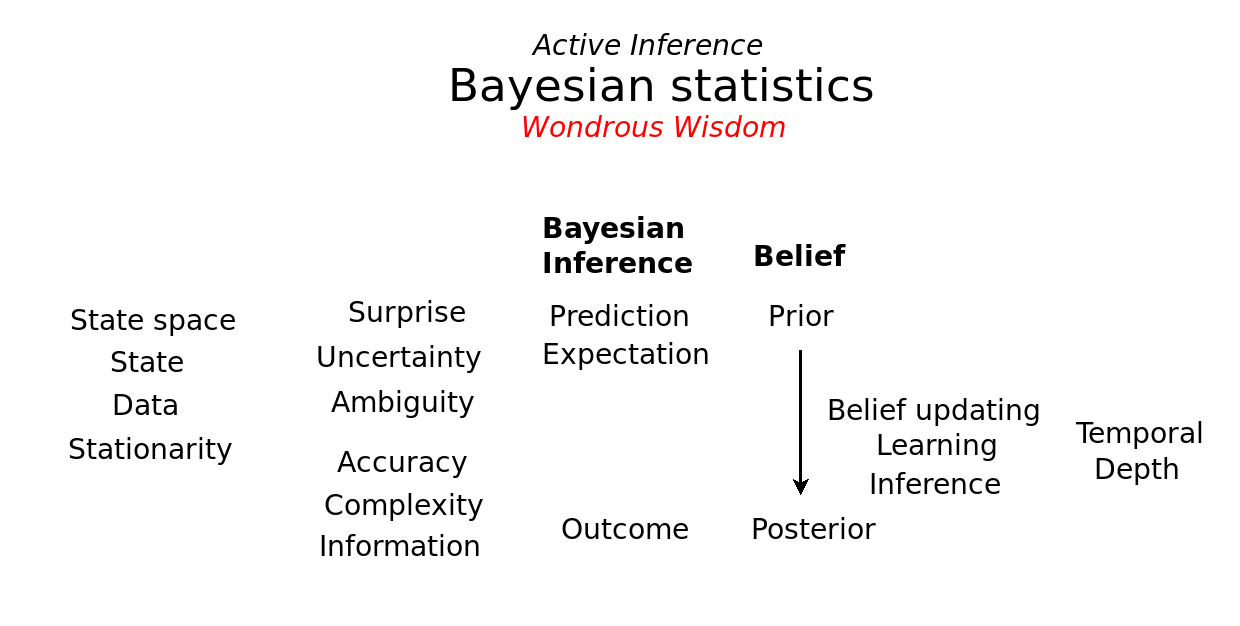

Bayesian statistics

| Active Inference Term | Definition | Wondrous Wisdom Term | Explanation |
| --- | --- | --- | --- |
| Accuracy | Broad sense: how “close to the mark” an Estimator is. Narrow sense: the expected or realized extent of Surprise on an estimation, usually about a Sense State reflecting the Recognition density. | | |
| Ambiguity | Broad sense: the extent to which stimuli have multiple plausible interpretations, requiring Priors and/or Action for disambiguation. Narrow sense: a specific model parameter used to model Uncertainty, usually about sensory Perception. | | |
| Bayesian Inference | As opposed to frequentist analysis, Bayesian Inference uses a specified Prior or Empirical prior to Update the Posterior distribution. | | |
| Belief | Broad sense: the felt sense by an Agent of something being true, or confidence that it is the case. Narrow sense: the State of a Random variable in a Bayesian Inference scheme. | | |
| Belief updating | The change in a Bayesian Inference Belief through time. | | |
| Complexity | The extent to which an Agent must revise a Belief to explain incoming Sensory observations. Formally, the Kullback-Leibler Divergence of the Posterior from the Prior, which is used in Bayesian model selection to find the simplest (least complex) model and avoid overfitting to the noise inherent in Sensory observations. | | |
| Data | A set of values of qualitative or quantitative variables about one or more Agents or objects. | | |
| Expectation | Within a Bayesian Inference framework, an Estimator about future timesteps. | | |
| Inference | The process of reaching a (local or global) conclusion within a Model, for example with Bayesian Inference. The process of using a Sensory observation (observed variable, data) along with a known set of parameters to determine the state of an unknown, Latent cause (unobserved variable). | | |
| Information | Measured in bits, the reduction of Uncertainty in a Belief distribution of some type. Usually Syntactic (Shannon), but it can also be Semantic (e.g. Bayesian). | | |
| Learning | Broad sense: the process of an Agent engaging in Updates to Cognition (and possibly Behavior). Narrow sense: the process of Bayesian Inference in which Generative Model parameters undergo Belief updating. | | |
| Outcome | If we consider the environment as a Generative Process that can be sampled when in a particular state, the statistical result (Data, Sensory observation) of the sampling is known as the outcome. The Data produced by sampling a Generative Process (a joint distribution on states and outcomes). | | |
| Posterior | The Update to the Prior after an Observation has occurred. In Bayes’ theorem, the Posterior is equal to the product of the Likelihood and the Prior, divided by the model evidence. | | |
| Prediction | An Estimator about a State in a Model at a future time. The process of using a learned Generative Model to forecast the future value of a Hidden State. | | |
| Prior | The initial or preceding state of a Belief in Bayesian Inference, before Sensory Data (Observation or Evidence) arrive. | | |
| State | The statistical, computational, or mathematical value of a parameter within the State space of a Model. | | |
| State space | The set of variables/parameters that describe a System. A state space is the set of all possible configurations of a System. | | |
| Stationarity | Of a Random variable: that it is described by parameters (e.g. the mean and variance of a Gaussian distribution) that are unchanging over the time horizon of analysis. | | |
| Surprise | In Bayesian Inference, Surprise is the negative log of the model evidence, so it rises as probability falls (high probability, low surprisal; low probability, high surprisal). It measures how “surprising” Sensory Data are to the Generative Model of the Agent; the bound used in its place includes the divergence between the Prior and Posterior Distribution. Surprise (also known as Surprisal or self-Information) is a quantity that, according to the Free Energy Principle, must be minimized for an Agent to survive; Variational Free Energy provides an upper bound on Surprisal and is minimized instead of minimizing Surprisal directly. | | |
| Temporal Depth | The length of the time window or horizon considered (longer time → deeper, i.e. more, Temporal Depth). | | |
| Uncertainty | In Bayesian Inference, a measure of the Expectation of Surprise (Entropy) of a Random variable (associated with its variance, or inverse Precision). Random fluctuations around the true value of a quantity we are trying to measure that result from uncontrolled variables in the environment or the measurement apparatus. | | |
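
The following minimal Python sketch makes the core quantities in this table concrete by carrying out one step of Bayesian Inference on a hypothetical two-state model. It computes the Posterior (Likelihood times Prior, divided by the evidence), Surprise as the negative log evidence, Complexity as the KL divergence of the Posterior from the Prior, and Accuracy as the expected log-likelihood under the Posterior. That reading of Accuracy is the usual variational-inference one and is an assumption here, not something stated in the ontology. The prior and likelihood numbers are invented for illustration. Logarithms are natural (nats) except where a value is converted to bits.

```python
import numpy as np

# Hypothetical two-state example: hidden state s in {0, 1}, outcome o in {0, 1}.
prior = np.array([0.5, 0.5])                  # Prior p(s)
likelihood = np.array([[0.9, 0.2],            # p(o=0 | s=0), p(o=0 | s=1)
                       [0.1, 0.8]])           # p(o=1 | s=0), p(o=1 | s=1)

def bayes_update(prior, likelihood, o):
    """Return (posterior, evidence) after observing outcome index o."""
    joint = likelihood[o] * prior             # p(o, s) for the observed o
    evidence = joint.sum()                    # model evidence p(o)
    return joint / evidence, float(evidence)

def kl(q, p):
    """Kullback-Leibler divergence KL[q || p] in nats (assumes strictly positive entries)."""
    return float(np.sum(q * (np.log(q) - np.log(p))))

def entropy(p):
    """Shannon entropy in nats (assumes strictly positive entries)."""
    return float(-np.sum(p * np.log(p)))

o = 0
posterior, evidence = bayes_update(prior, likelihood, o)
surprise = -np.log(evidence)                                   # -ln p(o)
complexity = kl(posterior, prior)                              # KL[posterior || prior]
accuracy = float(np.sum(posterior * np.log(likelihood[o])))    # E_q[ln p(o | s)]
# Drop in Uncertainty (entropy) caused by this particular observation, in bits.
information_bits = (entropy(prior) - entropy(posterior)) / np.log(2)

print("posterior:", posterior.round(3))
print("surprise:", round(surprise, 4), " complexity - accuracy:", round(complexity - accuracy, 4))
print("information gained (bits):", round(information_bits, 3))
```

The Posterior here is exact, so Complexity minus Accuracy equals Surprise. With an approximate Posterior the same difference would exceed Surprise, which is the sense in which Variational Free Energy bounds it.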

Action

| Active Inference Term | Definition | Wondrous Wisdom Term | Explanation |
| --- | --- | --- | --- |
| Action | Broad sense: the dynamics, mechanisms, and measurements of Behavior. Narrow sense: the sequence of Active States enacted by an Agent via Policy selection from Affordances. | | |
| Action Planning | The selection of an Affordance based on Inference of Expected Free Energy. | | |
| Agency | The ability of an Agent to engage in Action in its Niche and to enact Goal-driven selection or Policy selection based on Preference. | | |
| Behavior | The sequence of Actions that an Agent is observed to enact. | | |
| Policy | A sequence of Actions, reflected by a series of Active States, as implemented in Policy selection (Action Prediction, or Action and Planning as Divergence Minimization). | | |
| Policy selection | The process of an Agent engaging in Action Planning from a set of Affordances; in Active Inference, based on minimization of Expected Free Energy. | | |
| Preference | A parameter in a Bayesian Inference Markov Decision Process that ranks or scores the extent to which an Agent values a Sensory input. | | |
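
The following small Python sketch illustrates the vocabulary of this table: Affordances, a Policy as a sequence of Actions, Preference, and Policy selection. It assumes a hypothetical three-state world with two affordances ("stay" and "move"). It uses transition matrices `B`, preferences encoded as a log-probability vector `log_C` over outcomes, and a policy precision `gamma`. These names follow common Active Inference notation but are illustrative here. Every action sequence up to a planning horizon `T` (the Temporal Depth) is treated as a policy and scored by its expected log preference. Policy selection is then a softmax over the scores. The sketch includes only the preference-satisfying (pragmatic) part of Expected Free Energy and omits the epistemic part to keep it short.

```python
import itertools
import numpy as np

# Hypothetical world: 3 states, observed directly (A is the identity), and 2 affordances.
# B[a][next, current] = p(next state | current state, action a)
B = np.array([
    np.eye(3),                      # action 0: "stay"
    np.roll(np.eye(3), 1, axis=0),  # action 1: "move" s -> s + 1 (mod 3)
])
A = np.eye(3)                                   # p(o | s): outcomes reveal states
log_C = np.log(np.array([0.1, 0.1, 0.8]))      # Preference: log prior over outcomes, favouring outcome 2
gamma = 4.0                                     # hypothetical precision over policies

def softmax(x):
    e = np.exp(x - np.max(x))
    return e / e.sum()

def pragmatic_value(policy, qs0):
    """Expected log preference accumulated over the policy's horizon."""
    qs, value = qs0, 0.0
    for a in policy:
        qs = B[a] @ qs              # predicted hidden state after action a
        qo = A @ qs                 # predicted outcome
        value += float(qo @ log_C)
    return value

T = 2                                           # Temporal Depth (planning horizon)
policies = list(itertools.product(range(B.shape[0]), repeat=T))
qs0 = np.array([1.0, 0.0, 0.0])                # the agent believes it starts in state 0
G = np.array([-pragmatic_value(pi, qs0) for pi in policies])  # pragmatic part of EFE only
q_pi = softmax(-gamma * G)                      # Policy selection: lower G, higher probability
for pi, p in zip(policies, q_pi):
    print(pi, round(float(p), 3))
best = policies[int(np.argmax(q_pi))]
```

Starting in state 0, only the policy (move, move) reaches the preferred outcome within two steps, so it receives almost all of the probability mass.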

Free Energy

| Active Inference Term | Definition | Wondrous Wisdom Term | Explanation |
| --- | --- | --- | --- |
| Active Inference | Active Inference is a Process Theory related to the Free Energy Principle. | | |
| Epistemic value | The value of Information gain, or the Expectation of reduction in Uncertainty about a State with respect to a Policy; used in Policy selection. | | |
| Expected Free Energy | A measure for performing Inference on Action over a given time horizon (Policy selection, Action and Planning as Divergence Minimization). The two components of Expected Free Energy are the imperative to satisfy Preferences and the penalty for failing to minimize the Expectation of Surprisal. | | |
| Free Energy | An Information Theoretic quantity that constitutes an upper bound on Surprisal. Free Energy can refer to various or multiple sub-types: Variational Free Energy, Expected Free Energy, Free Energy of the Expected Future, Helmholtz Free Energy, .... | | |
| Free Energy Principle | A generalization of Predictive Coding (PC) according to which organisms minimize an upper bound on the Entropy of Sensory input (or sensory signals), namely the Free Energy. Under specific assumptions, Free Energy translates to Prediction error. A set of statistical principles that describe how Agents can maintain their self-organization in the face of random fluctuations from the environment. | | |
| Generalized Free Energy | Past Variational Free Energy plus future Expected Free Energy (each totaled over Policies). | | |
| Pragmatic Value | The benefit to an organism of a given Policy or Action, measured in terms of the probability of a Policy leading to Expectations of Random variable values that are aligned with the Preferences of the Agent. Pragmatic value describes the extent to which a given action is aligned with rewarding preferences over sensory outcomes. | | |
| Variational Free Energy | A measure, F, that performs e.g. Inference on Sensory Data given a Generative Model. The two sources that compose Variational Free Energy are Model complexity (overfitting) and Model accuracy. | | |
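
The following short Python sketch compares the two free-energy quantities defined above. The first part evaluates Variational Free Energy as Complexity minus Accuracy for several candidate beliefs `q` about a hidden state. It shows that every value is at least the Surprise, with equality at the exact Posterior. The second part computes a one-step Expected Free Energy as negative Epistemic value minus Pragmatic Value. It uses the decomposition common in the Active Inference literature: Epistemic value as expected information gain about states, and Pragmatic Value as expected log preference. The "peek" and "wait" actions, their action-dependent likelihoods, and all numbers are hypothetical.

```python
import numpy as np

def kl(q, p):
    """KL[q || p] in nats (assumes strictly positive entries)."""
    return float(np.sum(q * (np.log(q) - np.log(p))))

def entropy(p):
    """Shannon entropy in nats (assumes strictly positive entries)."""
    return float(-np.sum(p * np.log(p)))

# Variational Free Energy: scoring beliefs about s after observing o.
prior = np.array([0.5, 0.5])                  # p(s)
likelihood = np.array([[0.9, 0.2],            # p(o=0 | s)
                       [0.1, 0.8]])           # p(o=1 | s)
o = 0

def vfe(q):
    """F = Complexity - Accuracy = KL[q || p(s)] - E_q[ln p(o | s)]."""
    return kl(q, prior) - float(q @ np.log(likelihood[o]))

surprise = float(-np.log(likelihood[o] @ prior))
exact_posterior = likelihood[o] * prior / (likelihood[o] @ prior)
candidates = [np.array([0.5, 0.5]), np.array([0.6, 0.4]), exact_posterior]
F_values = [vfe(q) for q in candidates]      # each value is >= surprise

# Expected Free Energy: choosing between two hypothetical one-step actions.
def expected_free_energy(qs, A, log_C):
    """G = -(epistemic value) - (pragmatic value) for a one-step policy."""
    qo = A @ qs                                                   # predicted outcomes
    ambiguity = float(sum(qs[s] * entropy(A[:, s]) for s in range(len(qs))))
    epistemic = entropy(qo) - ambiguity                           # expected information gain
    pragmatic = float(qo @ log_C)                                 # expected log preference
    return -epistemic - pragmatic, epistemic, pragmatic

qs = np.array([0.5, 0.5])                     # uncertain which hidden state holds
A_peek = np.array([[0.9, 0.1],                # "peek": the outcome is informative about s
                   [0.1, 0.9]])
A_wait = np.full((2, 2), 0.5)                 # "wait": the outcome carries no information
log_C = np.log(np.array([0.5, 0.5]))          # flat preferences

G_peek, epi_peek, _ = expected_free_energy(qs, A_peek, log_C)
G_wait, epi_wait, _ = expected_free_energy(qs, A_wait, log_C)
print("F values:", np.round(F_values, 4), "surprise:", round(surprise, 4))
print("G(peek) =", round(G_peek, 4), " G(wait) =", round(G_wait, 4))
```

Preferences are flat, so the Pragmatic Value is the same for both actions. The lower Expected Free Energy of "peek" therefore comes entirely from its Epistemic value.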

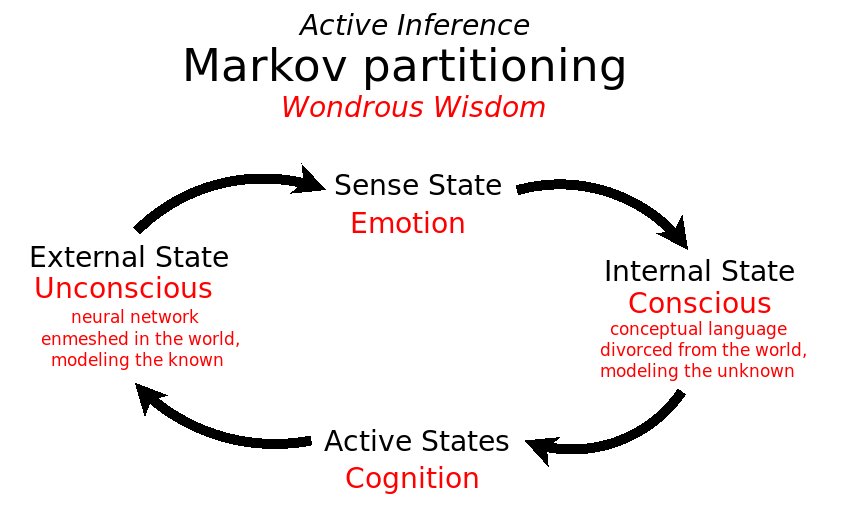

Markov partitioning

In Wondrous Wisdom, the crucial division is not between the self and the world, but between the unconscious and the conscious. The unconscious is considered part of the world, enmeshed within it and speaking for it. Thus the unconscious is understood as the External States, and the conscious as the Internal States.

| Active Inference Term | Definition | Wondrous Wisdom Term | Explanation |
| --- | --- | --- | --- |
| Active States | In the Friston Blanket formalism, the Blanket States are the Sense States (incoming Sensory input) and the Active States (outgoing influence of Policy selection). | | |
| Blanket State | The set of states in the Markov Blanket partition given which the Internal States and External States are conditionally independent. | | |
| External State | States whose Conditional density is independent of the Internal States, conditioned on the Blanket States. | | |
| Friston Blanket | A Markov Blanket partitioned into Active States and Sense States. | | |
| Internal State | States whose Conditional density is independent of the External States, conditioned on the Blanket States. | | |
| Markov Blanket | A Markov Partitioning Model of a System, reflecting an Agent as delineated from the Niche via an Interface. The Blanket States of the Markov Blanket are the State(s) given which the Internal States and External States are conditionally independent. | | |
| Markov Decision Process | A Bayesian Inference Model in which an Agent's Generative Model can implement Policy selection on Affordances reflected by Active States, while other features of the Generative Process are outside the Control (states) of the Agent. | | |
| Sense State | In the Friston Blanket formalism, the Blanket States are the Sense States (incoming Sensory input) and the Active States (outgoing influence of Policy selection). | | |
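
The conditional independence that defines a Markov Blanket can be checked numerically. This Python sketch assumes a single time slice of four hypothetical binary variables wired as a chain, External → Sense → Internal → Active, with made-up probability tables. It verifies that External and Internal States are independent once the Blanket States (Sense and Active) are given, but not otherwise. A real Friston Blanket also lets Active States act back on External States over time. A single-slice chain leaves that loop out and only illustrates the partition itself.

```python
import numpy as np

# Hypothetical binary variables in one time slice, wired as a chain:
# external e -> sensory s -> internal i -> active a
p_e = np.array([0.3, 0.7])                    # p(e)
p_s_e = np.array([[0.9, 0.2], [0.1, 0.8]])   # p(s | e), rows s, columns e
p_i_s = np.array([[0.8, 0.3], [0.2, 0.7]])   # p(i | s), rows i, columns s
p_a_i = np.array([[0.6, 0.1], [0.4, 0.9]])   # p(a | i), rows a, columns i

# Joint p(e, s, i, a) = p(e) p(s | e) p(i | s) p(a | i), axes ordered (e, s, i, a)
joint = np.einsum("e,se,is,ai->esia", p_e, p_s_e, p_i_s, p_a_i)

def independent_given_blanket(joint):
    """Check p(e, i | s, a) == p(e | s, a) p(i | s, a) for every blanket value (s, a)."""
    j = joint.transpose(1, 3, 0, 2)                    # axes: s, a, e, i
    cond = j / j.sum(axis=(2, 3), keepdims=True)       # p(e, i | s, a)
    p_e_b = cond.sum(axis=3, keepdims=True)            # p(e | s, a)
    p_i_b = cond.sum(axis=2, keepdims=True)            # p(i | s, a)
    return bool(np.allclose(cond, p_e_b * p_i_b))

def independent_marginally(joint):
    """Check p(e, i) == p(e) p(i) without conditioning on the blanket."""
    p_ei = joint.sum(axis=(1, 3))                      # axes: e, i
    return bool(np.allclose(p_ei, np.outer(p_ei.sum(axis=1), p_ei.sum(axis=0))))

print("e independent of i given blanket (s, a):", independent_given_blanket(joint))  # True
print("e independent of i without the blanket:", independent_marginally(joint))      # False
```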

Agents in the Niche

| Active Inference Term | Definition | Wondrous Wisdom Term | Explanation |
| --- | --- | --- | --- |
| Affordance | Options or capacities for Action by an Agent (sometimes called “Affordance 3.0”). From Ecological Psychology: the Perception of a possibility for Action (sometimes called “Affordance 2.0”). | | |
| Agent | An entity as modeled by Active Inference, with Internal States separated from External States by Blanket States. | | |
| Cognition | An Agent modifying the weights of its Internal States for the purpose of Action Planning and/or Belief updating. (This is a realist counterpart of Goal-driven selection.) | | |
| Ensemble | A group of more than one Agent. | | |
| Generative Model | A formalism that describes the mapping between Hidden States and Expectations of Action Prediction and Sensory outcomes. A Recognition Model updates Internal State parameters that correspond to External States (including Hidden State causes of environment states), Blanket States, and Internal States (meta-modeling). In contrast, a Generative Model takes those same Internal State parameter Estimators and emits expected or plausible observations. | | |
| Generative Process | The underlying dynamical process in the Niche giving rise to Agent Observation and Agent Action Prediction. An enactive ecological process using morphological computing, in which the Niche, the Regime of Attention, morphogenesis, and the generative model interact to create an embodied learning dynamic. | | |
| Niche | The Ecological System constituting the Generative Process (as partitioned from the Agent, who instantiates a Generative Model). | | |
| Non-Equilibrium Steady State | Technically, a Non-Equilibrium Steady State requires a solution to the Fokker-Planck equation (i.e., density dynamics). A non-equilibrium steady-state solution entails solenoidal (i.e., conservative or divergence-free) dynamics that break detailed balance (and underwrite stochastic chaos). In other words, the dynamics of systems at Non-Equilibrium Steady State are not time-reversible (unlike equilibrium steady states, in which the flow is entirely dissipative). Generally, a Non-Equilibrium Steady State refers to a System with dynamics that are unchanging, or at Stationarity, in some State. | | |
| Particle | An Agent consisting of Blanket States and Internal States, partitioned off from the Niche. | | |
| Recognition Model | The kind of Model that affords Variational Inference, which lets us calculate or approximate a probability distribution; a synonym for Variational Model. In Dayan and Abbott (2001), the probability of a Hidden State (causes) given Sensory Data (effects) under some parameters. | | |
| Representation | A structural correspondence between some Random variable inside a System and some Random variable outside the System (isomorphism being the strongest kind of correspondence), such that the System engages in Inference and the Inference carried out by the System maintains the correspondence. | | |
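
A discrete-state analogue shows the contrast between equilibrium and Non-Equilibrium Steady State. The Python sketch below uses a hypothetical three-state Markov chain rather than Fokker-Planck density dynamics. Both chains have a stationary distribution, but only the symmetrised one satisfies detailed balance. The biased chain keeps a circulating probability current, the discrete counterpart of solenoidal flow, and a positive entropy production rate. That positive rate is what makes it time-irreversible.

```python
import numpy as np

def stationary(P):
    """Stationary distribution pi with pi @ P == pi (left eigenvector for eigenvalue 1)."""
    eigvals, eigvecs = np.linalg.eig(P.T)
    v = np.real(eigvecs[:, np.argmin(np.abs(eigvals - 1.0))])
    return v / v.sum()

def probability_current(P):
    """Net flow J[i, j] = pi_i P_ij - pi_j P_ji; all zeros iff detailed balance holds."""
    flux = stationary(P)[:, None] * P
    return flux - flux.T

def entropy_production(P):
    """Entropy production rate in nats per step; zero iff the stationary chain is time-reversible."""
    flux = stationary(P)[:, None] * P           # assumes all transition probabilities > 0
    return float(0.5 * np.sum((flux - flux.T) * np.log(flux / flux.T)))

# Hypothetical chain biased to circulate 0 -> 1 -> 2 -> 0 (rows: current state, columns: next state).
P_cycle = np.array([[0.1, 0.7, 0.2],
                    [0.2, 0.1, 0.7],
                    [0.7, 0.2, 0.1]])
# Symmetrised version of the same chain: still stationary, but now in detailed balance (equilibrium).
P_equilibrium = (P_cycle + P_cycle.T) / 2

for name, P in (("NESS", P_cycle), ("equilibrium", P_equilibrium)):
    print(name, stationary(P).round(3),
          "max |J| =", round(float(np.abs(probability_current(P)).max()), 3),
          "entropy production =", round(entropy_production(P), 3))
```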

Perception

| Active Inference Term | Definition | Wondrous Wisdom Term | Explanation |
| --- | --- | --- | --- |
| Attention | Broad sense: a Generative Model being aware of some Stimulus, as reflected by its Salience. Narrow sense: Attention modulates the confidence in the Precision of Sense States, reflecting Sensory input. | | |
| Evidence | Data as recognized and interpreted by the Generative Model of an Agent. | | |
| Observation | The Belief updating of an Internal State registered by a Sensory input, given the weighting assigned to that class of input in comparison with the weighting of the competing Priors. (This is a narrow sense of “observation,” where the Agent is “looking for this kind of input.” This sense excludes situations where (a) an incoming stimulus with these attributes has already been explained away or pre-discounted, or (b) the prior is so strongly weighted as to exclude updating in response to any inputs (other than, perhaps, “catastrophic” ones, as may occur in e.g. fainting or hysterical blindness).) Any Sensory input, either discrete-valued or continuous-valued. (This is a broad sense.) | | |
| Perception | Posterior State Inference after each new Observation. | | |
| Salience | The extent to which a Cue commands the Attention of an Agent, given its Regime of Attention. | | |
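
The following Python sketch models Perception as repeated Posterior inference, with Attention acting on the Precision of Sense States. A hypothetical Generative Process emits noisy outcomes from a fixed hidden state. The Agent's Generative Model uses the same likelihood but re-weights it by a precision `gamma`, implemented as a column-wise softmax of `gamma` times the log-likelihood. This is one common way of modelling sensory precision and is an assumption of this sketch. Higher precision makes each Observation move the Posterior further, while lower precision makes the Agent discount its senses.

```python
import numpy as np

rng = np.random.default_rng(seed=1)

def softmax(x, axis=0):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

# Generative Process (the Niche): a fixed hidden state emitting noisy outcomes.
true_state = 1
A_process = np.array([[0.7, 0.3],      # p(o=0 | s=0), p(o=0 | s=1)
                      [0.3, 0.7]])     # p(o=1 | s=0), p(o=1 | s=1)

def precision_weighted(A, gamma):
    """Hypothetical attention model: column-wise softmax(gamma * ln A); gamma > 1 sharpens, gamma < 1 flattens."""
    return softmax(gamma * np.log(A), axis=0)

def perceive(observations, A, prior):
    """Perception: one Bayesian Posterior update per Observation; returns the belief trajectory."""
    q = prior.copy()
    history = [q]
    for o in observations:
        q = A[o] * q
        q = q / q.sum()
        history.append(q)
    return history

observations = [int(rng.choice(2, p=A_process[:, true_state])) for _ in range(10)]
prior = np.array([0.5, 0.5])
finals = {}
for gamma in (0.25, 1.0, 4.0):
    beliefs = perceive(observations, precision_weighted(A_process, gamma), prior)
    finals[gamma] = beliefs[-1]
    print(f"gamma={gamma}: final posterior {beliefs[-1].round(3)}")
```

With this symmetric likelihood, the log odds of the final Posterior scale in proportion to `gamma`. The three runs therefore see the same evidence and differ only in how confidently they believe it.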

Systems

| Active Inference Term | Definition | Wondrous Wisdom Term | Explanation |
| --- | --- | --- | --- |
| Hierarchical Model | A hierarchy of Estimators operating at different spatiotemporal scales (so they track features at different scales), all carrying out Predictive Processing. | | |
| Living system | An Agent engaged in Autopoiesis. | | |
| Multi-scale system | The Realism framing of a Hierarchical Model. | | |
| System | A set of relations described by the State space of a Model; differentiable and integrable in terms of variables and functions. | | |
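
A two-level discrete model in Python can illustrate a Hierarchical Model whose estimators work at different timescales. The slow level holds a context `h` that stays fixed across a window of observations. The fast level draws a fresh state `s_t` at each step. All tables are hypothetical. Inference here is exact, by enumeration, rather than the Predictive Processing (prediction-error) scheme named in the definition. Bottom-up messages from each observation update the slow context, and the context belief then serves as an empirical prior for each fast-level state.

```python
import numpy as np

# Hypothetical two-level model with two timescales.
p_h = np.array([0.5, 0.5])                    # slow level: context h, fixed for the whole window
p_s_h = np.array([[0.8, 0.3],                 # fast level: p(s_t | h), rows s, columns h
                  [0.2, 0.7]])
p_o_s = np.array([[0.9, 0.1],                 # observations: p(o_t | s_t), rows o, columns s
                  [0.1, 0.9]])

def normalise_log(log_p):
    p = np.exp(log_p - log_p.max())
    return p / p.sum()

def infer(observations):
    """Exact inference by enumeration: slow posterior p(h | o_1:T) and fast posteriors p(s_t | o_1:T)."""
    p_o_h = p_o_s @ p_s_h                              # p(o | h) = sum_s p(o | s) p(s | h)
    log_lik = np.log(p_o_h[observations])              # bottom-up messages, shape (T, h)
    log_post_h = np.log(p_h) + log_lik.sum(axis=0)
    fast = []
    for t, o in enumerate(observations):
        # Top-down: the context belief from all *other* observations is an empirical prior on s_t.
        prior_h_t = normalise_log(log_post_h - log_lik[t])
        prior_s_t = p_s_h @ prior_h_t
        q = p_o_s[o] * prior_s_t
        fast.append(q / q.sum())
    return normalise_log(log_post_h), fast

observations = [1, 1, 0, 1, 1, 1]
post_h, fast = infer(observations)
print("p(h | o_1:T) =", post_h.round(3))
print("p(s_3 | o_1:T) =", fast[2].round(3), "versus flat prior:", (p_o_s[0] / p_o_s[0].sum()).round(3))
```

Under a flat prior, the third observation (o = 0) would give p(s = 0) = 0.9. The slow context inferred from the other observations pulls that belief lower, toward s = 1.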

Additional Notes

- Inference = ways of figuring things out

- Michael Levin on adjunctions and active inference: optimal reconstruction (relates to internal modeling of the external environment); the laziest way to do something (relates to least action); prediction is a structure-preserving transformation; math is agentic because it makes predictions.